Some of the 5-star reviews are jokes, and others are just bizarre!

Seems like a huge nothingburger

GrapheneOS — an Android security developer — provides some comfort, that SafetyCore “doesn’t provide client-side scanning used to report things to Google or anyone else. It provides on-device machine learning models usable by applications to classify content as being spam, scams, malware, etc. This allows apps to check content locally without sharing it with a service and mark it with warnings for users.”

does this mean it's in GrapheneOS? i couldn't find it

It's nothing, until it incorrectly flags your family pics as CP, keeps a count of how often it does that even though the pics are long gone, and a cop checks your phone for some reason.

Or until it gets programmed to also flag users who have specific memes on their phone, or it starts running sentiment analysis on your memes and puts you on a list of potential dissenters to the coup.

Oh, so it's an on-device service initially used to stop accidental sharing of nudes and available as a service call to other apps for that and other types of content.

Look, I'm not saying you can't be creeped out by phones automatically categorizing the content of your photos. I'm saying you're what? Five, ten years late to that and killing this instance won't do much about it. Every gallery app on Android is doing this. How the hell did you think searching photos by content was working? The default Android Gallery app by Google does it, Google Photos sure as hell does it, Samsung's Gallery does it. The very minimum I've seen is face matching for people.

This is one of those things where gen-AI panic gets people to finally freak out about things big data brokers have been doing for ages (using ML, too).

> Every gallery app on Android is doing this.

FossifyGallery is not doing this.

It's almost a certainty they're checking the hashes of your pics against a database of known CSAM hashes as well. That, in and of itself, isn't necessarily wrong, but you just know scope creep will mean they'll be checking for other "controversial" content somewhere down the line...

That does not seem to be the case at all, actually. At least according to the GrapheneOS devs. The article quotes them on this and links a source tweet.

Since... you know, Twitter, here's the full text:

Neither this app or the Google Messages app using it are part of GrapheneOS and neither will be, but GrapheneOS users can choose to install and use both. Google Messages still works without the new app.

The app doesn't provide client-side scanning used to report things to Google or anyone else. It provides on-device machine learning models usable by applications to classify content as being spam, scams, malware, etc. This allows apps to check content locally without sharing it with a service and mark it with warnings for users.

It's unfortunate that it's not open source and released as part of the Android Open Source Project and the models also aren't open let alone open source. It won't be available to GrapheneOS users unless they go out of the way to install it.

We'd have no problem with having local neural network features for users, but they'd have to be open source. We wouldn't want anything saving state by default. It'd have to be open source to be included as a feature in GrapheneOS though, and none of it has been so it's not included.

Google Messages uses this new app to classify messages as spam, malware, nudity, etc. Nudity detection is an optional feature which blurs media detected as having nudity and makes accessing it require going through a dialog.

Apps have been able to ship local AI models to do classification forever. Most apps do it remotely by sharing content with their servers. Many apps have already have client or server side detection of spam, malware, scams, nudity, etc.

Classifying things like this is not the same as trying to detect illegal content and reporting it to a service. That would greatly violate people's privacy in multiple ways and false positives would still exist. It's not what this is and it's not usable for it.

GrapheneOS has all the standard hardware acceleration support for neural networks but we don't have anything using it. All of the features they've used it for in the Pixel OS are in closed source Google apps. A lot is Pixel exclusive. The features work if people install the apps.

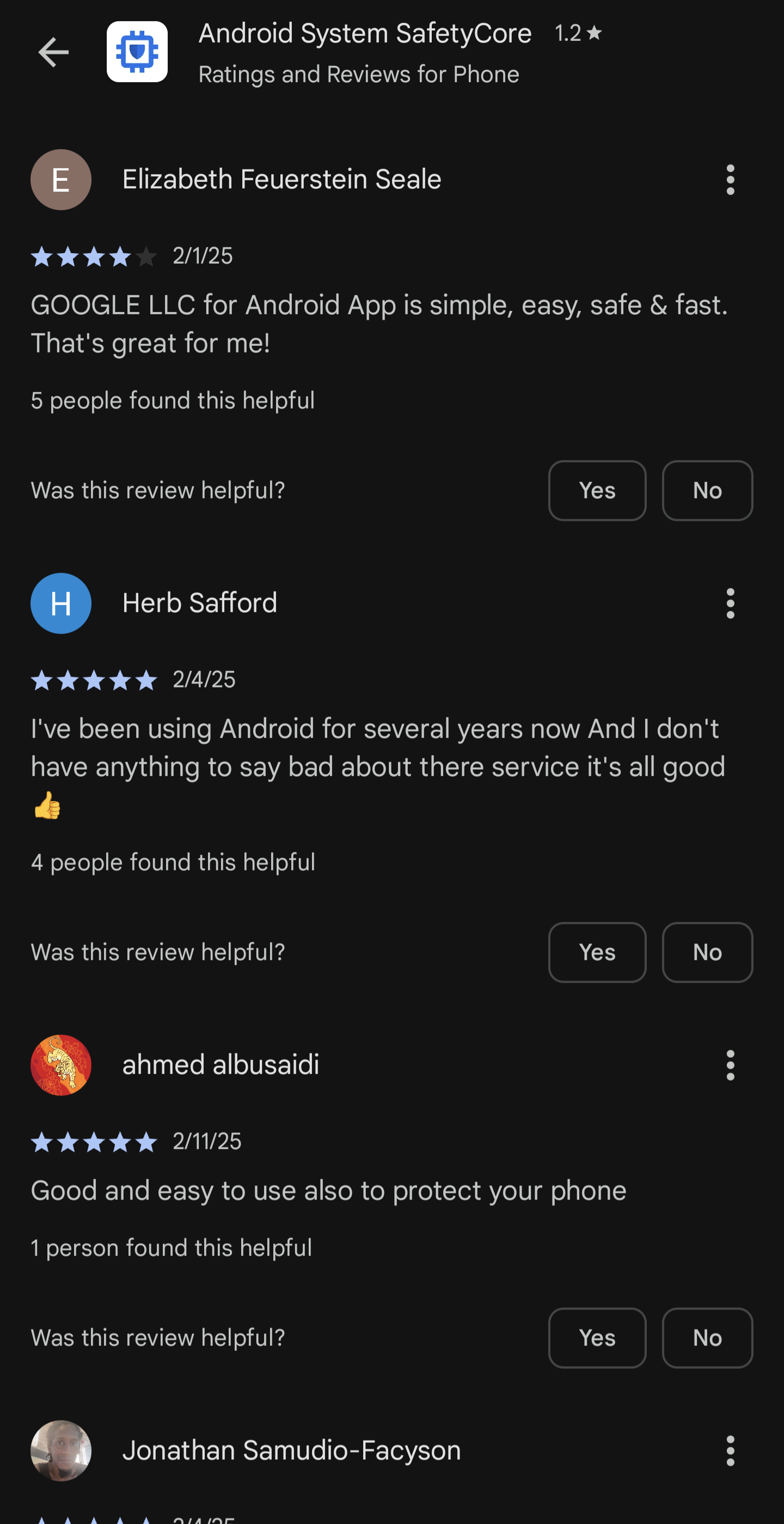

After deleting Google's SafetyCore, do the following to ensure Google doesn't reinstall it.

It might take some messing with Obtainium's settings to ensure that the latest version of the SafetyCore placeholder is installed, so here is an export of my settings just for this app. Just copy the text and save it as a .json file to import the settings.

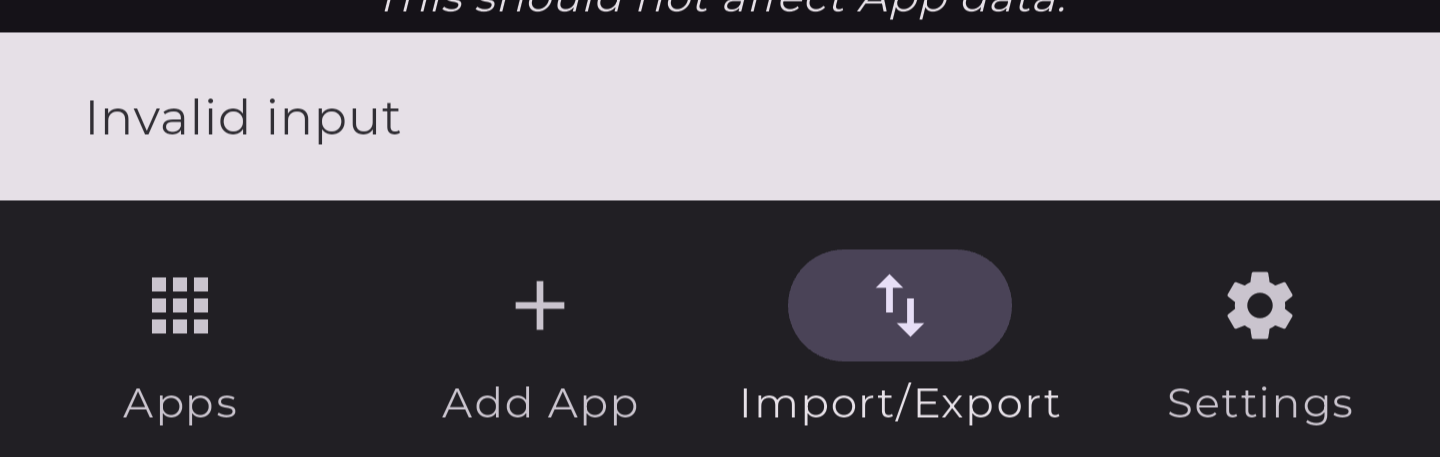

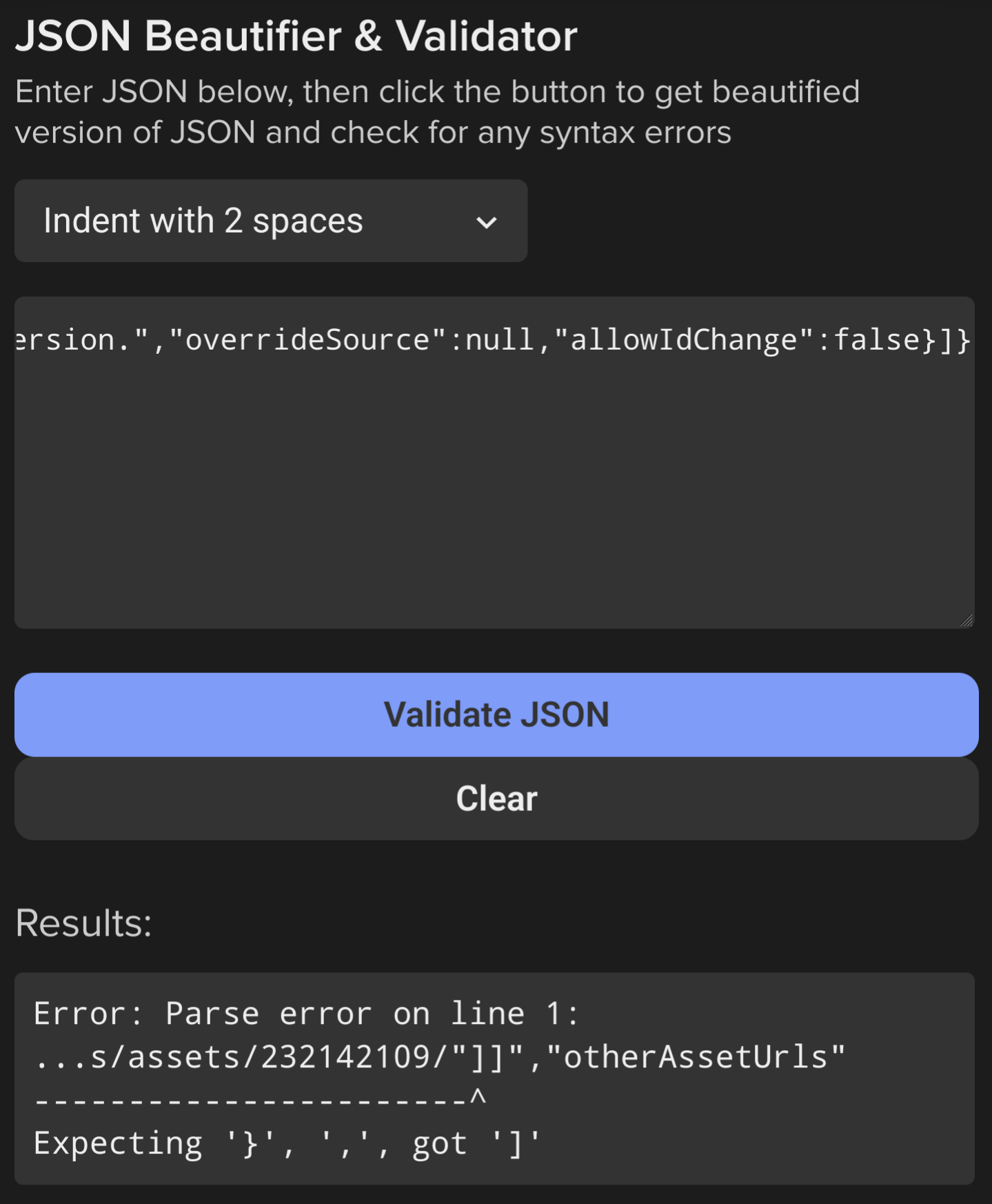

{"apps":[{"id":"com.google.android.safetycore","url":"https://github.com/daboynb/Safetycore-placeholder","author":"daboynb","name":"com.google.android.safetycore","installedVersion":"v3.0","latestVersion":"v3.0","apkUrls":"[[\"Safetycore-placeholder.apk\",\"https://api.github.com/repos/daboynb/Safetycore-placeholder/releases/assets/232142109/"]]","otherAssetUrls":"[[\"v3.0.tar.gz\",\"https://api.github.com/repos/daboynb/Safetycore-placeholder/tarball/v3.0/"],[\"v3.0.zip\",\"https://api.github.com/repos/daboynb/Safetycore-placeholder/zipball/v3.0/"]]","preferredApkIndex":0,"additionalSettings":"{\"includePrereleases\":false,\"fallbackToOlderReleases\":false,\"filterReleaseTitlesByRegEx\":\"\",\"filterReleaseNotesByRegEx\":\"\",\"verifyLatestTag\":true,\"dontSortReleasesList\":false,\"useLatestAssetDateAsReleaseDate\":true,\"releaseTitleAsVersion\":true,\"trackOnly\":false,\"versionExtractionRegEx\":\"\",\"matchGroupToUse\":\"\",\"versionDetection\":false,\"releaseDateAsVersion\":false,\"useVersionCodeAsOSVersion\":true,\"apkFilterRegEx\":\"\",\"invertAPKFilter\":false,\"autoApkFilterByArch\":true,\"appName\":\"\",\"appAuthor\":\"\",\"shizukuPretendToBeGooglePlay\":false,\"allowInsecure\":false,\"exemptFromBackgroundUpdates\":false,\"skipUpdateNotifications\":false,\"about\":\"\",\"refreshBeforeDownload\":true}","lastUpdateCheck":1740740003732409,"pinned":false,"categories":[],"releaseDate":1740416435000000,"changeLog":"This release is signed, you need to uninstall the previuos version.","overrideSource":null,"allowIdChange":false}]}

This doesn't load in Obtainium, and trying to validate the json gets the error below.

It looks like the value should be a simple list of strings, but everything is escaped and it has double square brackets, and it's enclosed in quotes.
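For anyone hitting the same validation error, here is a rough Python sketch of one possible cause and a workaround. The quoted, escaped value with double square brackets looks like a JSON list stored inside a string (the `additionalSettings` field in the same export is also a string holding JSON), so that part may be intentional. What does look damaged is the end of each URL: the export has `/"]` where the other nested quotes use `\"`. The sketch assumes those were originally `\"]` and that the backslash was mangled when the text was posted; that is a guess, not something confirmed by the poster or by Obtainium. The helper names are made up for illustration, and the sample is a shortened copy of the export above.

```python
import json


def repair_export(text: str) -> str:
    """Undo the suspected damage in the pasted export.

    Assumption (not confirmed): every `/"]` in the paste was originally
    `\\"]`, i.e. an escaped quote closing a URL inside a nested JSON string.
    """
    return text.replace('/"]', '\\"]')


def load_export(text: str) -> dict:
    """Parse the export, falling back to the repaired text if it is invalid."""
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return json.loads(repair_export(text))


# Shortened sample with the same damage pattern as the export in this thread.
damaged = (
    r'{"apps":[{"id":"com.google.android.safetycore",'
    r'"apkUrls":"[[\"Safetycore-placeholder.apk\",'
    r'\"https://api.github.com/repos/daboynb/Safetycore-placeholder'
    r'/releases/assets/232142109/"]]"}]}'
)

app = load_export(damaged)["apps"][0]
# apkUrls is itself a JSON document stored as a string, so decode it again.
apk_urls = json.loads(app["apkUrls"])
print(apk_urls[0][0])
```

To use it on the full export, read the saved file into a string, pass it through `repair_export`, and write the result back before importing. If the import still fails after that, the cause is something else.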

Why would Obtainium be needed instead of installing the APK directly?

Oh yes, you can install it directly. Obtainium, however, will help with upgrading to new versions as they get released.

You can install it directly, I have.

As one Google Play Store customer said: "No consent given, install could not be paused or stopped. I watched it install itself on my phone on January 22, 2025 (couldn't pause or cancel it) AND it did all of that over mobile network (my settings are to never download or install anything unless I'm on Wi-Fi). Description tells you nothing. Permissions are for virtually EVERYTHING."

...

However, some have reported that SafetyCore reinstalled itself during system updates or through Google Play Services, even after uninstalling the service. If this happens, you'll need to uninstall SafetyCore again, which is annoying.

I've said that this is possible multiple times and people have straight up told me "you don't understand how Android security works - this is impossible". Well, here we are...

I have pictures of my kids doing silly things in the tub. This is gonna be fun.

I'd remove those from your backups. There was a situation a while back where a guy had his Google account shut down because he sent pictures of his child's skin rash to his doctor, and the photos were flagged as CSAM when he uploaded them.

Edit: A bit late, but here's an article about it: https://www.theguardian.com/technology/2022/aug/22/google-csam-account-blocked

Well pretty soon the FBI will have them too!

For EVERYBODY'S SAFETY

I think Elon and trump are just fishing for the freshest of meat to beat their meat to

Same. What parent doesn't have tub pics of their little kids? This is concerning and pushing me closer to Calyx/Graphene.

As the commenter above you mentioned regarding doctors, I have photos that probably look worse than fun-in-the-bath pictures. As a parent, I have taken at least a handful of close-up photos of genitals (and in earlier years, anuses) to show to a doctor with a befuddled and embarrassed tone of "so, is this normal? It looks weird, I was worried!" I mean to delete them, but sometimes I forget until I run into them accidentally much later on. TBH, I wish it was only on my device with no Google Photos syncing, but I have also sent those to a friend's wife who is a pediatrician for a sanity check before I go on wasting a doctor's appointment over nothing...

I recently volunteered at my kids' school and had to take the mandatory reporter training, which taught me that along with teachers, coaches, etc., people working at photo labs are also mandated reporters (in my state in the US), which really drove it home... I can only imagine some poor photo tech at Walmart in the 90s having to deal with finding and reporting child abuse, fuck....

You can also use Canta to completely uninstall the app if it's not letting you.
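If you'd rather do it from a computer, here is a minimal Python sketch that checks for the package over adb and removes it for the main user. It assumes adb is installed and on your PATH, USB debugging is enabled, and the phone is connected. The function names are made up for illustration. Note that the placeholder app mentioned earlier in the thread uses the same package id, so this check cannot tell the placeholder apart from the real thing.

```python
import subprocess
from typing import List

# Package id as it appears in the Obtainium export earlier in the thread.
PACKAGE = "com.google.android.safetycore"


def is_installed(pm_list_output: str, package: str = PACKAGE) -> bool:
    """Return True if `package` appears in `pm list packages` output."""
    prefix = "package:"
    for line in pm_list_output.splitlines():
        line = line.strip()
        if line.startswith(prefix) and line[len(prefix):] == package:
            return True
    return False


def uninstall_command(package: str = PACKAGE) -> List[str]:
    """Build the adb command that removes the package for user 0."""
    return ["adb", "shell", "pm", "uninstall", "--user", "0", package]


def remove_if_present() -> bool:
    """Uninstall the package if the connected device reports it.

    Needs a connected device; returns True if an uninstall was attempted.
    Also matches the placeholder app, since it shares the package id.
    """
    listing = subprocess.run(
        ["adb", "shell", "pm", "list", "packages"],
        capture_output=True, text=True, check=True,
    ).stdout
    if not is_installed(listing):
        return False
    subprocess.run(uninstall_command(), check=True)
    return True
```

Calling `remove_if_present()` after a system update is one way to catch the reinstall people have reported. Skip it if you installed the placeholder on purpose.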