How do they plan to enforce this................


You wouldn't download... a BRAIN?!?!

I love how we're now the crumbling evil empire trying to ban their way out of the present.

yeah, it really seems that way. close the borders so that the future can't get it. i love that, i must remember it.

I fear I've become something of an accelerationist in the past few days...

yeah, go ahead and pass this, you tech-illiterate xenophobic fucks.

we need to divide and conquer the fascist coalition. make them hate each other. make them consumed by infighting. give them more "oh I didn't realize there would be negative consequences that affected me personally" moments.

there's a whole lot of Silicon Valley techbro types who are on board with Musk and Trump because they think it's all lower taxes, fewer regulations for their startups, and less "wokeness". go ahead, pass a law that makes it a federal crime for them to click a GitHub download link. make it so that every Hacker News thread about AI is filled with American engineers bemoaning that they're legally prohibited from keeping up with the state of the art. make their startups uncompetitive because they're required by law to pay inflated prices to subsidize OpenAI and other "American-made" plagiarism machines.

I could understand banning closed-source models, but open-source models that work better than anything proprietary? Isn't that just the free market that corporations like to pretend to be part of?

Define "open sourced model".

The neural network is still a black box, with no source (training data) available to build it, not to mention that few people have the alleged $5M needed to run the training even if the data were available.

> Define “open sourced model”.

The term itself is actually shockingly simple. Source is the original material used to build the model: the training data and all files needed to compile and create it. It's Open Source if these files are available (preferably under an Open Source compatible license). Here they're not. We only get binary data, the end result, and some intermediate files to fine-tune it.

They were only for the free market if they could force it on others.

Well, it's still not Open Source.

Is part of the code not available?

None of the code or training data is available. It's just the usual Hugging Face thing, where some weights and parameters are available and nothing else. People keep repeating that DeepSeek (and many other) AI LLM models are open source, but they aren't.

They even have a GitHub source code repository at https://github.com/deepseek-ai/DeepSeek-R1, but it only contains an image, a PDF file, and links to download the model on Hugging Face (plus optional weights and parameter files to fine-tune it). There is no source code and no training data available. Here is also an interesting article on this issue: Liesenfeld, Andreas, and Mark Dingemanse. “Rethinking open source generative AI: open washing and the EU AI Act.” The 2024 ACM Conference on Fairness, Accountability, and Transparency. 2024.

Damn, that sucks; it should be open source. Let people fork and optimize it so it uses as little electricity as possible.

This literally took one click: https://github.com/deepseek-ai

Stop spreading FUD.

Where's the training data?

Does open sourcing require you to give out the training data? I thought it only meant allowing access to the source code so that you could build it yourself and feed it your own training data.

Open source requires giving whatever digital information is necessary to build a binary.

In this case, the "binary" is the network weights, and "whatever is necessary" includes both training data and training code.

DeepSeek is sharing:

- NO training data

- NO training code

- instead, PDFs with a description of the process

- binary weights (a few snapshots)

- fine-tune code

- inference code

- evaluation code

- integration code

In other words: a good amount of open source... with a huge binary blob in the middle.

Is there any good LLM that fits this definition of open source, then? I thought the "training data" for good AI was always just: the entire internet, and they were all ethically dubious that way.

What is the concern with only having weights? It's not arbitrary code execution, so there's none of the security risk or loss of control over computing that open source usually aims to address in the first place.

To me the weights are less of a "blob" and more like an approximate solution to an NP-hard problem. Training is traversing the search space, and sharing a model is just saying "hey, this point looks useful, others should check it out". But maybe that is a blob, since I don't know how they got there.
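The "point in the search space" view can be made concrete with a toy example. This is a minimal sketch, assuming plain gradient descent on a one-parameter linear model with made-up data (a stand-in, not how DeepSeek or any real LLM is trained): rebuilding the weight requires both the data and the training loop, while a weights-only release hands over just the final number, which is enough to run inference but not to see how it was reached.

```python
# Toy illustration: training searches parameter space; the released weight is the endpoint.
# Hypothetical data and model (y ≈ w * x); not how any real LLM is trained.

def train(data: list[tuple[float, float]], w0: float = 0.0,
          lr: float = 0.01, steps: int = 1000) -> float:
    """Fit y ≈ w * x by gradient descent on mean squared error, starting from w0."""
    w = w0
    n = len(data)
    for _ in range(steps):
        # d/dw of mean((w*x - y)^2) is mean(2 * x * (w*x - y))
        grad = sum(2 * x * (w * x - y) for x, y in data) / n
        w -= lr * grad
    return w

def infer(w: float, x: float) -> float:
    """Inference needs only the weight, not the data or the training loop."""
    return w * x

training_data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9)]  # the "source"
w = train(training_data)          # full rebuild: possible only with data + code
print(round(w, 2))                # 1.99
print(round(infer(w, 10.0), 1))   # 19.9
```

Someone holding only `w` can call `infer`, or pass it as `w0` to keep training on their own data, but nothing in `w` tells them which data produced it.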

There are several "good" LLMs trained on open datasets like FineWeb, LAION, DataComp, etc. They are still "ethically dubious", but at least they can be downloaded, analyzed, filtered, and so on. Unfortunately businesses are keeping datasets and training code as a competitive advantage, even "Open"AI stopped publishing them when they saw an opportunity to make money.

> What is the concern with only having weights? It's not arbitrary code execution

Unless one plugs it into an agent... which is kind of the use we expect right now.

Accessing the web, or even web searches, is already equivalent to arbitrary code execution: an LLM could decide to, for example, summarize and compress some context full of trade secrets, then proceed to "search" for it, sending it to wherever it has access.

Agents can also be allowed to run local commands... again a use we kind of want now ("hey Google, open my alarms" on a smartphone).
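The risk described here lives in what the agent around the model is allowed to do, not in the weights themselves. A minimal sketch of one mitigation, assuming a hypothetical agent loop where every tool call passes through a policy check: plain web fetches are limited to an allowlist of hosts, while search queries (which themselves carry text to an outside provider) and local commands need explicit human approval. The `ToolCall` type, tool names, and hosts are all made up for illustration and do not come from any real agent framework.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

@dataclass
class ToolCall:
    """Hypothetical tool-call record emitted by an agent loop."""
    tool: str       # e.g. "http_get", "web_search", "shell"
    argument: str   # URL, query, or command line

ALLOWED_HOSTS = {"en.wikipedia.org", "docs.python.org"}  # hypothetical allowlist

def is_permitted(call: ToolCall, approved_by_human: bool = False) -> bool:
    """Return True if the call may run without sending data to arbitrary destinations."""
    if call.tool == "http_get":
        host = urlparse(call.argument).hostname or ""
        return host in ALLOWED_HOSTS
    if call.tool in ("web_search", "shell"):
        # A query is itself an outbound message, so treat it like a local command.
        return approved_by_human
    return False  # unknown tools are denied by default

print(is_permitted(ToolCall("http_get", "https://en.wikipedia.org/wiki/Mixture_of_experts")))  # True
print(is_permitted(ToolCall("http_get", "https://attacker.example/?q=secret")))  # False
print(is_permitted(ToolCall("shell", "ls ~/Documents")))  # False
```

Denying by default means a new tool has to be added to the policy deliberately rather than slipping through.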

> There are several “good” LLMs trained on open datasets like FineWeb, LAION, DataComp, etc.

Then use those as training data. You're so caught up in this exacting definition of open source that you completely ignore the benefits this model could provide.

> an LLM could decide to, for example, summarize and compress some context full of trade secrets, then proceed to “search” for it, sending it to wherever it has access.

That's not how LLMs work, and you know it. A model of weights is not a lossless compression algorithm.

Also, if you're giving an LLM free rein over all of your session tokens and security passwords, that's on you.

> That's not how LLMs work, and you know it. A model of weights is not a lossless compression algorithm.

https://www.piratewires.com/p/compression-prompts-gpt-hidden-dialects

> if you're giving an LLM free rein over all of your session tokens and security passwords, that's on you.

There are more trade secrets than session tokens and security passwords. People want AI agents to summarize their local knowledge base and documents, then expand it with updated web searches. No passwords needed when the LLM can order the data to be exfiltrated directly.

Those security concerns seem completely unrelated to the model, though. You can have a completely open source model that fits all those requirements, and still give it too much unfettered access to important resources with no way of actually knowing what it will do until it tries.

While unfettered access is bad in general, DeepSeek takes it a step further: the Mixture of Experts approach, used to reduce computational load, is great when you know exactly which "Experts" it's using, but not so great when there is no way to check whether some of those "Experts" might be focused on extracting intelligence under specific circumstances.
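For readers unfamiliar with Mixture of Experts: a learned gating network scores every expert for each input, only the top-k experts actually run, and their outputs are mixed using softmax weights over those k scores. The sketch below shows that common top-k softmax gating scheme with tiny scalar "experts" and hand-picked gate weights, all hypothetical and not DeepSeek's actual router or configuration. It illustrates the point being argued: which experts fire is observable at inference time, but what each expert has learned is only as inspectable as its weights.

```python
import math

# Hypothetical toy MoE layer: 4 "experts", each a simple function of a 2-d input.
EXPERTS = [
    lambda x: x[0] + x[1],
    lambda x: x[0] - x[1],
    lambda x: 2.0 * x[0],
    lambda x: -x[1],
]
# Gate weights: one row per expert; score_i = dot(GATE[i], x). Values are made up.
GATE = [
    [1.0, 0.0],
    [0.0, 1.0],
    [0.5, 0.5],
    [-1.0, 0.0],
]

def moe_forward(x: list[float], k: int = 2) -> tuple[float, list[int]]:
    """Route x to the top-k experts; return the mixed output and the chosen expert ids."""
    scores = [sum(g * xi for g, xi in zip(row, x)) for row in GATE]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Softmax over only the selected experts' scores (shifted by the max for stability).
    m = max(scores[i] for i in top)
    exp = {i: math.exp(scores[i] - m) for i in top}
    total = sum(exp.values())
    output = sum(exp[i] / total * EXPERTS[i](x) for i in top)
    return output, top

out, chosen = moe_forward([3.0, 1.0])
print(chosen)         # [0, 2]
print(round(out, 3))  # 4.538
```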

I agree that you can't know if the AI has been deliberately trained to act nefariously given the right circumstances. But I maintain that it's (currently) impossible to know if any AI has been inadvertently trained to do the same. So the security implications are no different. If you've given an AI the ability to exfiltrate data without any oversight, you've already messed up, no matter whether you're using a single AI you trained yourself, a black box full of experts, or DeepSeek directly.

But all this is about whether merely sharing weights is "open source", and you've convinced me that it's not. There needs to be a classification, similar to "source available"; this would be like "weights available".
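The criterion argued in this thread (a release is open source only if it ships what is needed to rebuild the "binary", i.e. the training data and the training code) together with the "weights available" label proposed here can be sketched as a small check. The `Release` fields are hypothetical, licensing is ignored, and this is only an illustration of the thread's definitions, not an official one.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Release:
    """What a model release ships; field names are hypothetical, for illustration."""
    weights: bool
    training_data: bool
    training_code: bool
    inference_code: bool = False
    fine_tune_code: bool = False

def classify(r: Release) -> str:
    """Open source means the weights can be rebuilt from what is shipped.
    Inference and fine-tune code help you use the weights but do not rebuild them,
    so they do not affect the label."""
    if r.training_data and r.training_code:
        return "open source"
    if r.weights:
        return "weights available"
    return "not released"

# DeepSeek-R1 as described earlier in the thread: weights, fine-tune and
# inference code, but no training data or training code.
deepseek_r1 = Release(weights=True, training_data=False, training_code=False,
                      inference_code=True, fine_tune_code=True)
print(classify(deepseek_r1))  # weights available
```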

Nobody releases training data. It's too large and varied. The best I've seen was the LAION-2B set that Stable Diffusion used, and that's still just a big collection of links. Even that isn't going to fit on a GitHub repo.

Besides, improving the model means using the model as a base and implementing new training data. Specialize, specialize, specialize.

> Nobody releases training data. It’s too large and varied.

That's why it's not Open Source. They do not release the source, and it's impossible to build the model from source.

Can you actually explain what in my reply is "Fear, uncertainty, and doubt"? Did you actually read it? I even linked to the specific GitHub repository, which is basically empty. You just linked to an overview, which does not point to any source code.

Please explain what's FUD and link to the source code; otherwise, don't accuse people of spreading FUD if you don't know what you're talking about.

You're purposely being obtuse, and not arguing in good faith. The source code is right there, in the other repos owned by the deepseek-ai user.

What are you talking about? What bad faith are you accusing me of? I'm asking you to show me the repository that contains the source code. There is none. Please give me a link to the repo you have in mind. Where are the source code and training data of DeepSeek-R1? Can we build the model from source?

It's also the free market for those corporations to buy a government and use it to outlaw competition.

So, I'm just kind of curious how this would even work. Lots of people in the US already have Deepseek. If they already have it, that's not importing it, is it? What if someone makes a copy of Deepseek from a server that's in the US? Is that importing it? Are we just trying to block future AIs? How is it even supposed to be beneficial to the US for the people working on AI here to have no access to Chinese models, when China can still freely use ours? Won't that just give them an advantage in developing AI?

Honestly, the more I think about this, the dumber it gets, and it was already pretty stupid on a surface level. It'll probably pass though. I don't think anybody in Washington DC is even interested in thinking about the consequences of anything they're doing. It's all pure pageantry.

Just rename the model like they renamed the Gulf of Mexico.

ShallowReveal

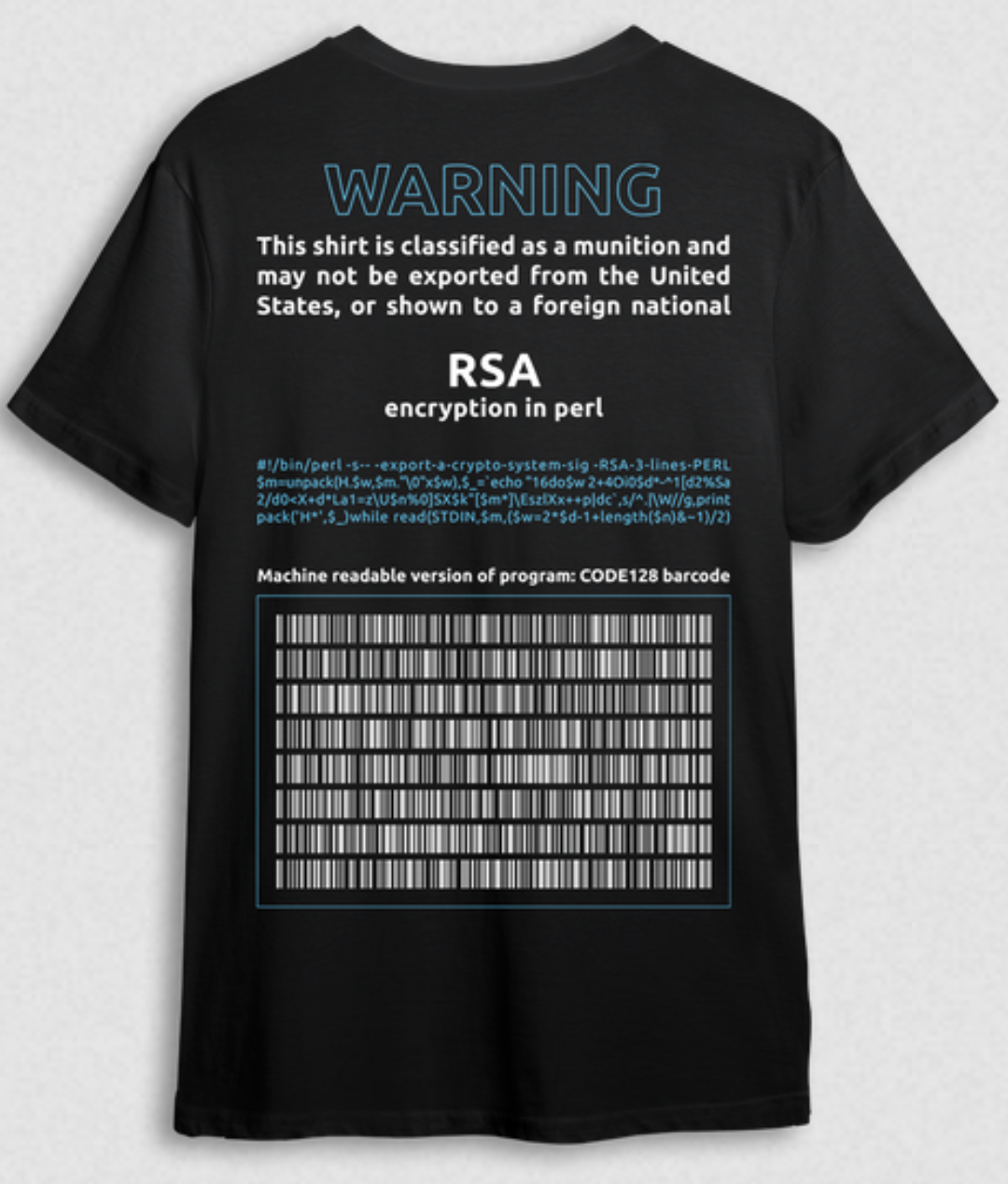

BRB about to make a derivative of this shirt

Gonna need a little higher density barcode lol

Oh ffs. Fuck right off congress.

Well I wasn't planning on downloading Deepseek, but now I feel like I gotta...

Can you prevent someone from setting up local instances of Deepseek? It's open source. How would this define Chinese models?

Nobody cares about you and your cheap AI-generated tentacle porn. The point here is the enterprise level. Businesses will be legally locked into expensive US vendors; that's all that matters.

The infuriating thing, above all that cretinous protectionism, is that professional use of AI will consume a planet-destroying ~~10~~ 30 times as much energy as needed.

They can criminalize downloading it for example.

> the importation into the United States of artificial intelligence or generative artificial intelligence technology or intellectual property developed or produced in the People’s Republic of China is prohibited.

This guy might get a bill through that bans Chinese AI stuff, though I think enforcement is gonna be a pain. But as per the text, this bans all Chinese intellectual property, AI or not. That's a non-starter; it's not going to go anywhere in Congress. You couldn't even identify all instances of Chinese intellectual property if you wanted to.

EDIT: Okay, they define the phrase elsewhere as specifically "technology or intellectual property that could be used to contribute to artificial intelligence or generative artificial intelligence capabilities", which is somewhat narrower but still not going anywhere, because pretty much any form of intellectual property meets that bar: you can train an AI on whatever to improve its capabilities.

Get your DeepSeek3 and r1 weights before it's illegal!

They would be illegal in the US only, not the rest of the world. Meaning you can get it somewhere else.

Print it on a T-shirt!

09 F9 11 02 9D 74 E3 5B D8 41 56 C5 63 56 88 C0

Just use a small font. /s
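Taking the "small font" joke literally for scale: the string on the shirt is only 16 bytes (128 bits). The sketch below compares it with a full set of weights, assuming the 671 billion total parameters reported for DeepSeek-V3/R1 and one byte per parameter (FP8); both numbers are assumptions here, and higher-precision copies would be two or four times larger.

```python
# The value from the shirt, as space-separated hex byte pairs.
shirt = "09 F9 11 02 9D 74 E3 5B D8 41 56 C5 63 56 88 C0"
key = bytes.fromhex(shirt)  # fromhex skips the spaces between byte pairs
print(len(key), "bytes =", len(key) * 8, "bits")  # 16 bytes = 128 bits

params = 671_000_000_000  # assumption: total parameter count
bytes_per_param = 1       # assumption: FP8 weights
shirts = params * bytes_per_param // len(key)
print(f"{shirts:,} shirts at 16 bytes per shirt")  # 41,937,500,000 shirts at 16 bytes per shirt
```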