I am probably unqualified to speak about this, since I use a low-profile RX 550 with a 768p monitor and almost never play newer titles, but I want to kickstart a discussion, so hear me out.

The push for more realistic graphics has been going on for longer than most of us can remember, and it made sense for most of that time, as anyone who has looked at an older game can confirm. I'm someone who has fun making fun of weird-looking 3D people.

But I feel game graphics have reached the point of diminishing returns. AAA studios today spend millions of dollars just to match the graphical level of their previous titles, often sacrificing other, more important things along the way, while people spend lots of money on power-hungry, heat-generating GPUs they don't really need.

I understand getting an expensive GPU for high-resolution, high-refresh-rate gaming, but for 1080p? You shouldn't need anything more powerful than a 1080 Ti for years. I think game studios should slow down their graphical improvements, since in my opinion they are unnecessary and mostly prevent people with lower-end systems from enjoying games. And who knows, maybe we will start seeing cheap 50-watt gaming GPUs that are viable and capable of running games at medium/high settings; even iGPUs render good graphics now.

TL;DR: why pay more and hurt the environment with higher power consumption when what we have is enough, and possibly overkill?

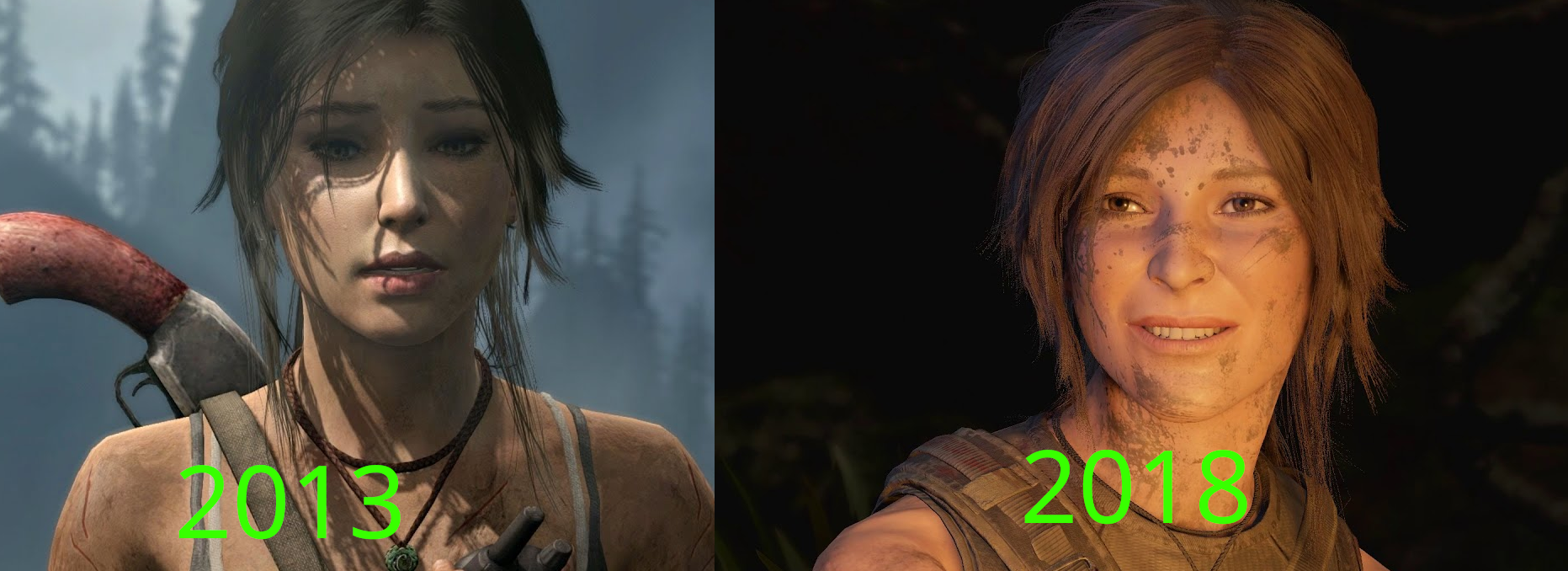

Note: it would be insane of me to claim that there is no big difference between the two pictures (Tomb Raider 2013 vs. Shadow of the Tomb Raider 2018), but can you really call either of them bad, especially the right picture (5 years old)?

Note 2: this is little more than a discussion starter, and it's unlikely to evolve into something larger.

I already wrote another comment on this, but to sum up my thoughts in a top comment:

Most (major) games nowadays don't look worlds better than The Witcher 3 (2015), yet they still play the same as games from 2015 (or older) while requiring much more powerful hardware with high energy consumption. And I think it doesn't help that a card like the RTX 4060 (roughly the power draw of a 1660 with performance slightly above a 3060) gets trashed for not providing a large performance increase.

It's not so much the card itself, it's the price and the misleading marketing around it (two versions to trick the average buyer, one with literally double the memory). It's also a downgrade from the previous-gen 3070, which is now cheaper. It's corporate greed with deliberately misleading marketing. If the card were €100 cheaper, it would actually be really good. I think that's the consensus among reviewers like GN, but don't quote a random dude on the Internet ahah

It's less the fact that there is a version with double the memory and more the fact that the one with less memory has a narrower memory bus than the previous generation, resulting in worse performance than the previous-generation card in certain scenarios.

I don't know about the two versions, but the 3070 bit is part of what I mean.

Price has been an issue with all hardware recently (and with other things too, due to inflation over the last few years), so it's not exclusive to the 4060. More importantly, from what I can tell, the 3070 delivers 1.2x to 1.4x the 4060's performance in games, but it consumes about 1.75x the power (rough numbers, I'm kind of busy right now). I don't have time to look up prices, but when you consider the massive difference in power consumption, the price difference might start making more sense; it only seems ridiculous if you focus purely on raw performance.
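To make the efficiency argument concrete, here is a minimal Python sketch that turns those rough ratios into a performance-per-watt comparison. It assumes the commenter's figures (3070 at 1.2x to 1.4x the 4060's performance and about 1.75x its power draw); these are estimates, not benchmark results, and the function name `relative_perf_per_watt` is hypothetical.

```python
# Rough figures quoted in the comment (RTX 3070 relative to RTX 4060).
# These are the commenter's estimates, not measured benchmark data.
PERF_RATIO_RANGE = (1.2, 1.4)  # 3070 performance / 4060 performance
POWER_RATIO = 1.75             # 3070 power draw / 4060 power draw


def relative_perf_per_watt(perf_ratio: float, power_ratio: float) -> float:
    """Return card A's performance per watt as a fraction of card B's.

    Both inputs are A/B ratios, so dividing them cancels out
    card B's absolute performance and wattage.
    """
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return perf_ratio / power_ratio


if __name__ == "__main__":
    for perf in PERF_RATIO_RANGE:
        eff = relative_perf_per_watt(perf, POWER_RATIO)
        print(f"{perf:.1f}x performance at {POWER_RATIO}x power -> "
              f"{eff:.2f}x the 4060's performance per watt")
```

With those assumed numbers, the 3070 lands at roughly 0.69x to 0.80x the 4060's performance per watt, meaning the 4060 does about 25% to 46% more work per watt. Prices are left out because the comment didn't have them.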

I remember seeing an article somewhere about this. Effectively, there are really bad diminishing returns with game graphics. You could triple the detail, but only so much of it can fit on the screen, or be noticed by your eyes.

And at the same time, they're bloating the install sizes of many of these AAA games with all manner of garbage, while simultaneously cutting corners on what is actually good about them.

There's definitely something to be said about proper use of texture quality. Instead of relying on VRAM to push detail, for games that go for realism I think it's interesting to look at games like Battlefield 1, which even today looks incredible despite very clearly having low-quality textures. It makes sense: the game is about running around and only periodically stopping, so the dirt doesn't need to be much more than some pixelated blocks. On the other hand, in Baldur's Gate 3 even the ground gets the kind of polish that Battlefield 1 puts into the rest of its visuals.

Both of these games are examples of polish put in the right places (in regard to visual aesthetics), and both seem to benefit greatly from it without a high hardware barrier to display it. Meanwhile, still visually compelling games like Cyberpunk 2077 or RDR2 do look great overall but take so many more resources to push those visuals. Granted, there are other factors at play, like genre, which of course dictates the measures taken to balance performance and fidelity, and both of these games are much larger in scope.

I wish they played more like games from the late '90s and early 2000s, instead of stripping out a lot of depth in favor of visuals. Back then, I expected games to get more complex and look better. Instead, they've looked better but played worse with each passing year.