Zero context to this...

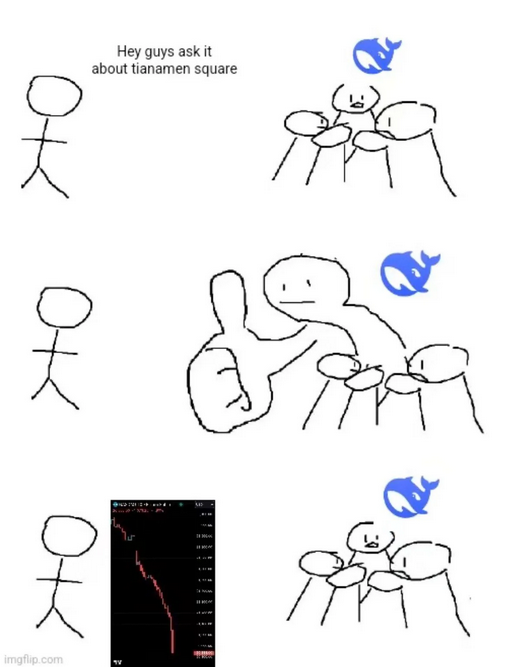

My experience with DeepSeek R1 is that the model itself is quite "unbound," but the chat UI (and maybe the API? Not 100% sure about that) does seem to be more aligned.

All the open Chinese LLMs have been like this, rambling on about Tiananmen Square as much as they can, especially if you ask in English. The devs seem to like "having their cake and eating it," complying with the govt through the most publicly visible portals while letting the model rip underneath.