I am probably unqualified to speak about this, as I am using an RX 550 low profile and a 768P monitor and almost never play newer titles, but I want to kickstart a discussion, so hear me out.

The push for more realistic graphics has been ongoing for longer than most of us can remember, and it made sense for most of that time, as anyone who has looked at an older game can confirm - I am a person who has fun making fun of weird-looking 3D people.

But I feel games' graphics have reached the point of diminishing returns. AAA studios today spend millions of dollars just to match the graphical level of their previous titles, often sacrificing other, more important things along the way, and people are unnecessarily spending lots of money on power-hungry, heat-generating GPUs.

I understand getting an expensive GPU for high-resolution, high-refresh-rate gaming, but for 1080p? You shouldn't need anything more powerful than a 1080 Ti for years. I think game studios should just slow down their graphical improvements, as they are unnecessary - in my opinion - and just prevent people with lower-end systems from enjoying games. And who knows, maybe we will start seeing cheap 50 watt gaming GPUs become viable and capable of running games at medium/high settings - even iGPUs render good graphics now.

TLDR: Why pay for more and hurt the environment with higher power consumption when what we have is enough - and possibly overkill?

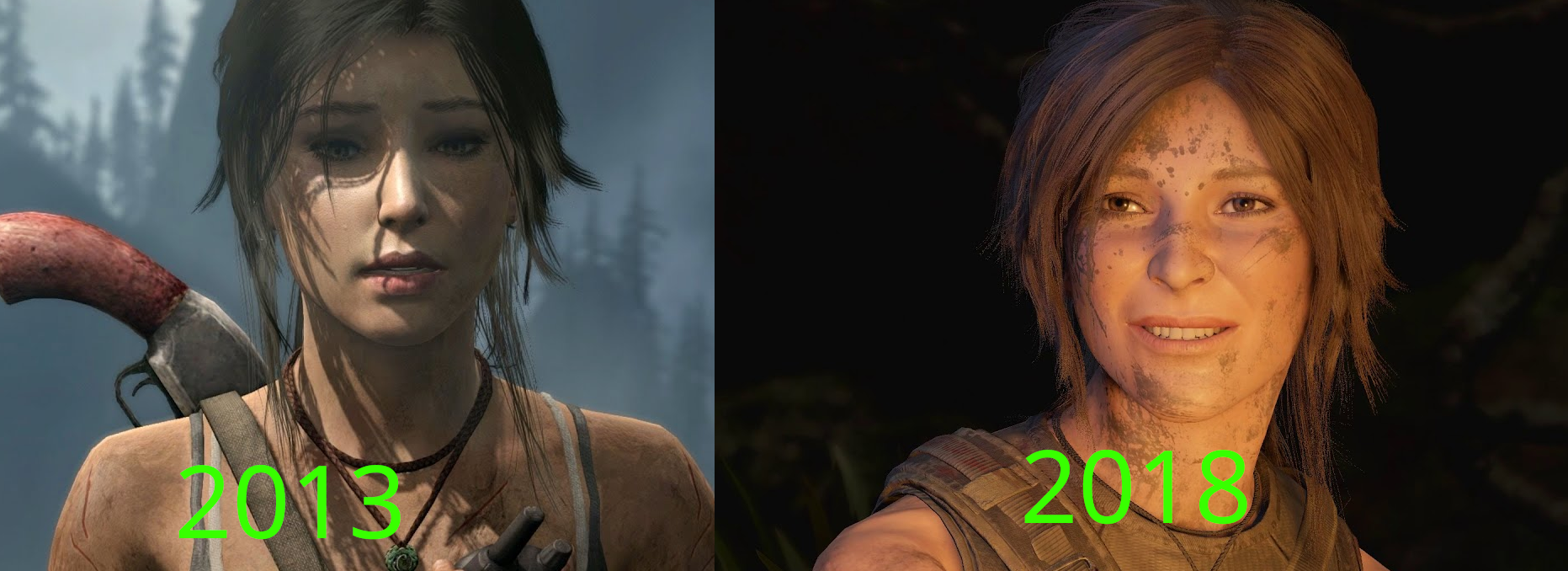

Note: it would be insane of me to claim that there is not a big difference between the two pictures - Tomb Raider (2013) vs Shadow of the Tomb Raider (2018) - but can you really call either of them bad, especially the right picture (5 years old)?

Note 2: this is not much more than a discussion starter that is unlikely to evolve into something larger.

EDIT: Never mind, I thought that was a sarcastic comment mocking the other user.

And what's wrong with that, exactly? Would you prefer broken games made by underpaid and overworked people?

As for "worse graphics", AC: Unity came out in 2014, The Witcher 3 came out in 2015, and Arkham Knight is also from 2015. All of those have technically worse graphics, but they don't look much different from modern games that need much beefier systems to run.

And here's AC: Unity compared to a much more modern game.

I'm pretty sure that's in support of the concept.

Ah, my bad. I didn't even realize it was a known quote, I just thought it was a sarcastic reply making fun of the other user.

It's from a tweet. It's earnest. You can google the quote to get more context.

You picked the absolute best examples of their respective years while picking the absolute worst example of the current year. That makes the comparison a bit biased, doesn't it? Why not compare them to Final Fantasy XVI or one of the remakes like Dead Space or Resident Evil 4? Or pick the worst example of previous years, like Rambo: The Video Game (2014)?
While good graphics don't make a good game, better hardware allows devs to spend less time making better graphics. 2 of the 3 examples you gave have static lighting (ACU and BAK), while the bad example you gave has dynamic lighting. Baking static lighting into the map is hugely time consuming when making a game. I can assure you from my second-hand experience that at least 1 of those 2 games you mentioned had to compromise gameplay because they couldn't change the map after the light baking was done.
And I'm just scratching the surface of the things that are time consuming when making good graphics like the games you mentioned. As an example, you have the infamous "squeezing through the gap" cutscene that a lot of last-generation AAA games had, because it allowed the game to load the next area. That was time wasted on choosing the best moments to do it, recording the scenes, scripting, testing etc. etc. All because the hardware was a limiting factor. Now that consumers have better hardware that isn't a problem anymore, but consumers had to upgrade to allow it.
That was also true for a lot of other techniques like tessellation, GPU particles etc. Consumers all had to upgrade to allow devs to make games prettier at less cost. And it will also be true with ray tracing and Nanite: both cut a LOT of dev time while making the game prettier, but require consumers to upgrade their hardware. Graphics are not all about looks, they are also about saving dev time, which makes even the worst-looking games look better.
If Rambo: The Video Game (2014) was made with the tech of today, it would look much better while costing the devs the same amount of time. Please don't see my comment as a critique, I'm just trying to make you understand that not everything is black and white, especially with something as complex as AAA development.

EDIT: I guess the absolute worst example of the current year would be Gollum, not Forspoken.

I don't think this is quite correct. A while back devs were talking about a AAApocalypse. Basically, as budgets keep on growing, having a game make its money back is exceedingly hard. This is why today's games need all sorts of monetisation, are always sequels, have low-risk game mechanics, and ship in half-broken states. Despite the industry basically abandoning novel game engines to focus on Unreal (which is also a bad thing for other reasons), game production times are increasing, and the reason is that while some of the time is amortised, the greater graphical fidelity makes the lower-fidelity work stand out. I believe an "indie" or even AA game could look better today for the same amount of effort than it could 10 years ago, but not a AAA game.

For example, you could not build Baldur's Gate 3 in Unreal. This is an unhealthy state for the industry to be in.

Yeah, I agree with everything you said. But what I was trying to say is that not all of the graphics push is hurting production. I believe that in this generation alone we have many new graphics techniques that aim to improve image quality while also taking load off the devs. Just look at Remnant II, which has the graphical fidelity of a AAA game but the budget of a AA one. Also, some of the increase in production time is due to the feature creep that a lot of games have. Every new game has to have millions of fetch quests, a colossal open world map, skill trees, online mode, crafting, looting system etc. etc., even if it makes no sense for the game to have them. Almost every single game mentioned in this thread suffers from this. Batman is notorious for its Riddler trophies, The Witcher has more question marks on the map than actual map, and Assassin's Creed... Well, do I even need to mention it? So the production time increase is not all the fault of the increase in graphical fidelity.