I am probably unqualified to speak about this, as I use a low-profile RX 550 with a 768p monitor and almost never play newer titles, but I want to kickstart a discussion, so hear me out.

The push for more realistic graphics has been going on for longer than most of us can remember, and it made sense for most of its lifespan, as anyone who has looked at an older game can confirm. I enjoy making fun of weird-looking 3D people.

But I feel game graphics have reached the point of diminishing returns. AAA studios today spend millions of dollars just to match the graphical level of their previous titles, often sacrificing other, more important things along the way, and people are spending lots of money on power-hungry, heat-generating GPUs they don't need.

I understand getting an expensive GPU for high-resolution, high-refresh-rate gaming, but for 1080p? You shouldn't need anything more powerful than a 1080 Ti for years. I think game studios should slow down their graphical improvements. In my opinion they are unnecessary and only prevent people with lower-end systems from enjoying games. And who knows, maybe we will start seeing cheap 50-watt gaming GPUs that can run games at medium/high settings; even iGPUs render good graphics now.

TL;DR: Why pay more, and hurt the environment with higher power consumption, when what we have is enough and possibly overkill?

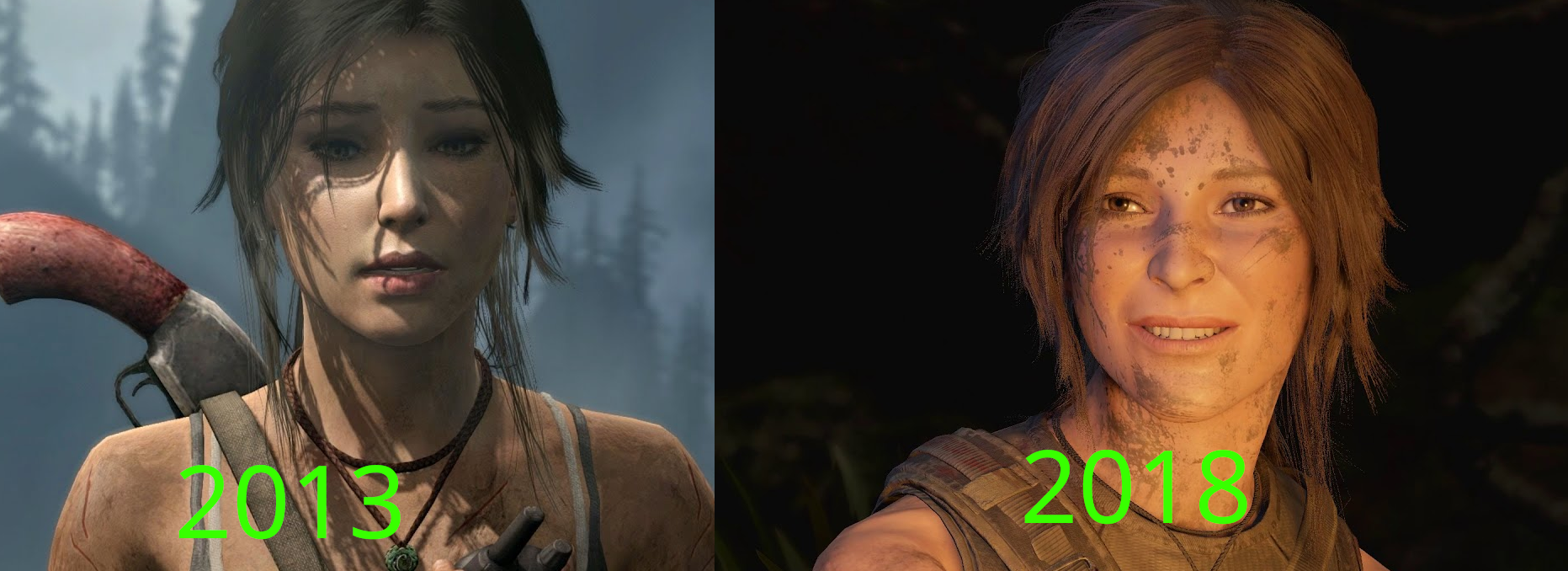

Note: It would be insane of me to claim there is no big difference between the two pictures (Tomb Raider (2013) vs. Shadow of the Tomb Raider (2018)), but can you really call either of them bad, especially the right picture, which is 5 years old?

Note 2: This is not much more than a discussion starter and is unlikely to evolve into something larger.

It depends on the type of game for me. For (competitive) multiplayer games, I don't really care much. As long as it looks coherent and artistically well done, I'm fine with it.

For single-player games, especially story-based ones, I like it when there's a lot of emphasis on graphical fidelity. Take The Last of Us Part II, for example. To me, especially in the (real-time) cutscenes, the seemingly great synergy between the artists and the engine developers really pays off. You can see how a character feels; emotions and tension come across very well. Keep in mind they managed this on aging PS4 hardware. They didn't do anything revolutionary in terms of graphical fidelity (no fancy RT or anything like that), they just combined everything really well. Would I have enjoyed the game if it looked significantly worse? Probably, but the looks of the game likely made me enjoy it more. Low-resolution textures and shadows or unrealistic facial expressions would have taken away from the immersion for me. Some would say the gameplay of TLoU II was rather bland because it didn't add or change much over the first TLoU, but for me, telling the story in this very precise way is what made it a great game (people will have other opinions, and that's fine).

I agree with you on the power consumption part, though. GPUs drawing north of 400 watts while playing a game is insane. I have a 3080, and it's what, 340 watts TDP? In reality it draws around 320 watts under full load, but that's still a lot of power for playing games. This generation's GPUs are a lot more efficient in theory (at least on the Nvidia side, a 4070 uses 100-150 watts less than a 3080 for the same performance), which is good.

But there's a trend in recent generations where manufacturers push their GPUs (and CPUs) well beyond their best performance-per-watt point, just to "win" against the competition in benchmarks and reviews. Sure, you can tweak them, but in my opinion it should be the other way around: make the GPUs efficient by default and give people the headroom to overclock with crazy power budgets if they choose to.

I remember when AMD released the FX-9590 back in 2013 and got absolutely laughed at because it had a TDP of 220 watts (I know, TDP != actual power consumption, but it was around 220). Nowadays, a 13900K draws 220 watts and then some out of the box, no problem, and people are like "bUt LoOk At ThE cInEbEnCh ScOrE!!111!111". Again, you can tweak it, but out of the box it sucks power like nobody's business.

This needs to improve again. High-end gaming GPUs should cap out at 250 watts and CPUs at around 95 watts, with mainstream GPUs targeting around 150 watts and CPUs probably around 65 watts.